I spent a lot of time last week benchmarking the MSA 2000 storage arrays with a DSS/sequential workload. I’m going to run you through my process so you can see where I made breakthroughs in performance.

I initially decided that, since we have 4 storage arrays, the best route to maximum performance was to first find the configuration that gives the fastest single-array performance. Having 4 arrays let me carve each one a different way and run identical benchmarks on all of them, which gave me 4 result sets in less time than it would take to carve all 4 identically, test, and then recarve all 4.

To start out, I chose 4 possible configurations that I thought had potential for good performance (we have 48 disks + 1 global spare per array):

- Configuration #1: 4x RAID 5 devices of 12 disks each, striped into a RAID 0+5 configuration.

- Configuration #2: 4x RAID 5 devices of 12 disks each, split across both shelves (6 from each shelf), striped into a RAID 0+5 configuration.

- Configuration #3: 6x RAID 5 devices of 8 disks each, striped into a RAID 0+5 configuration.

- Configuration #4: 4x RAID 6 devices of 12 disks each – RAID 0+6 was not supported by this array, so I did the striping in Oracle.
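One quick way to compare these four layouts is to count how many disks in each one actually hold data rather than parity. The sketch below is only that arithmetic, using the standard rule of one parity disk per RAID 5 group and two per RAID 6 group; the `Layout` class and its field names are made up for illustration and are not anything the array exposes.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Layout:
    """Hypothetical description of one carving of a 48-disk array."""
    name: str
    groups: int            # number of RAID 5/6 virtual disks
    disks_per_group: int   # physical disks in each virtual disk
    parity_per_group: int  # 1 for RAID 5, 2 for RAID 6

    @property
    def total_disks(self) -> int:
        return self.groups * self.disks_per_group

    @property
    def data_disks(self) -> int:
        # Disks' worth of capacity left after parity is subtracted.
        return self.groups * (self.disks_per_group - self.parity_per_group)


LAYOUTS = [
    Layout("#1 RAID 5, 4x12", 4, 12, 1),
    Layout("#2 RAID 5, 4x12 split across shelves", 4, 12, 1),
    Layout("#3 RAID 5, 6x8", 6, 8, 1),
    Layout("#4 RAID 6, 4x12", 4, 12, 2),
]

for layout in LAYOUTS:
    print(f"{layout.name}: {layout.data_disks} data disks "
          f"of {layout.total_disks}")
```

All four layouts use the same 48 disks, so on paper the RAID 6 layout has the fewest data disks to read from.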

After creating the underlying virtual disks, I placed a total of 16 volumes of approximately 400GB each on top. (The actual volume size varies depending on how the storage is carved; I got anywhere from 360GB to 403GB each.) I then told the Oracle ORION benchmarking tool to treat all 16 volumes as a RAID 0 stripe set, which is what Oracle likes to do to achieve greater performance.

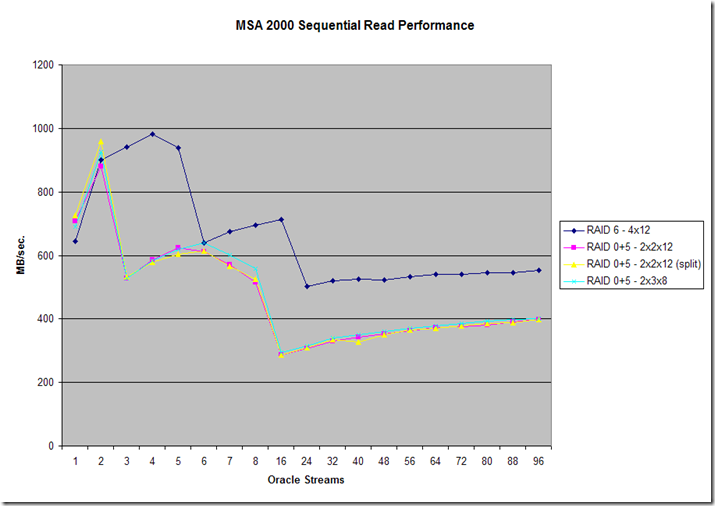

Here are the results of these initial tests:

The first thing that jumped right out at me was how much faster the RAID 6 configuration was than the other 3. I wanted to figure out why, since my past experience has led me to believe that RAID 6 is usually slightly slower than RAID 5. The only thing that was different was that the array supports only plain RAID 6, not RAID 6+0 or 0+6. For all of the RAID 5 tests, I was using the array’s RAID 0+5 capability (they call it RAID 50) to stripe across multiple RAID 5 virtual disks. My theory was that, for some reason, using the array’s RAID 50 technology was harming performance.

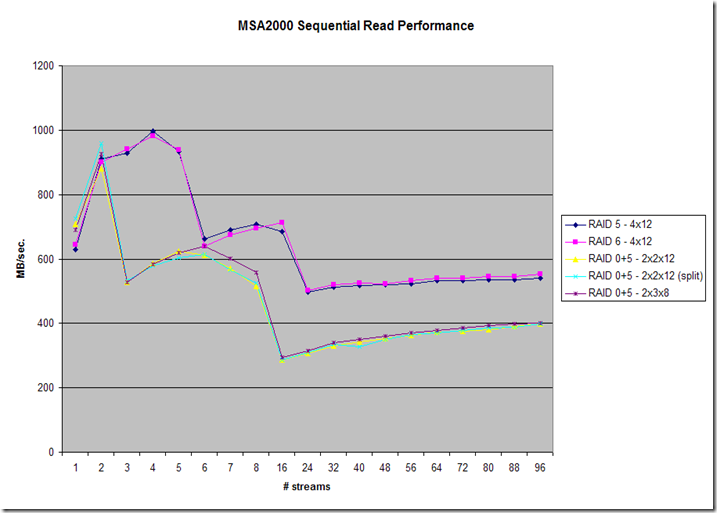

To test this theory, I ran a new test, this time without using RAID 0+5 on the array, simply carving 4 virtual disks of 12 disks each in a RAID 5 configuration. Of course, Oracle still stripes across all 16 of the volumes I created on these 4 virtual disks, so the effect is the same. The only difference is that the striping is now done in software rather than hardware. Here are the results of those tests:

Now we’re getting somewhere! This was my first breakthrough: Determining that utilizing RAID 50 or RAID 0+5 capability at the hardware level was harming performance meant we could achieve greater performance by striping in software, and leaving that feature disabled on the storage array. As you can see, the difference between RAID 5 and RAID 6 is negligible in performance, so we could now make our decision based more on redundancy or availability as opposed to performance.

We were now topping out at around 1000 MB/sec, or close to 1GB a second of data transfer rate. This is not bad for a single array, but I knew the MSA 2000 could perform better. Now, to determine how to get that last bit of performance out of the storage.

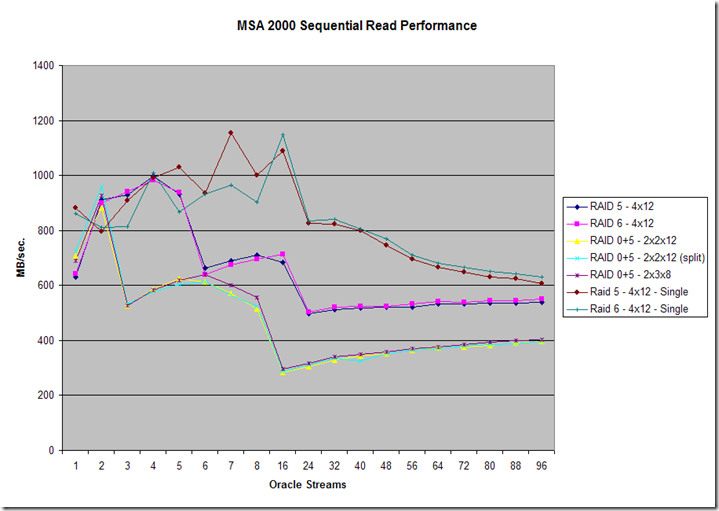

I had one more idea for improving performance. Up until now, I had been creating 16 volumes, 4 on each virtual disk, and using Oracle to stripe across them. Each virtual disk was either a RAID 5 or RAID 6 containing 12 physical disks. My new theory was that creating 4 volumes on each virtual disk and having Oracle stripe across all of them made the hard disks do unnecessary seeking, since each read or write had to access all 4 volumes on the same set of disks simultaneously. I decided, rather than carving 4 volumes per virtual disk, to just carve one large volume per virtual disk, 4 total, and test like that:

My theory was correct. By creating only 4 volumes instead of 16, each volume containing the entire space of a RAID 5 or 6 virtual disk with 12 physical drives in it, we were able to achieve a maximum throughput of about 1150MB/sec. This was a 15% improvement over our previous test results, and it approaches the theoretical maximum of 1.24GB/sec for a fully loaded array.
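To make the seeking argument concrete, here is a minimal sketch of round-robin striping across volumes. It assumes consecutive stripe units go to consecutive volumes and that volumes are numbered so each virtual disk holds a contiguous run of them; both are simplifying assumptions of mine, not confirmed behavior of ORION or the array. The function names are invented for illustration.

```python
from collections import defaultdict

VDISKS = 4  # RAID 5/6 virtual disks per array, 12 physical disks each


def volume_for_unit(unit: int, num_volumes: int) -> int:
    """Round-robin: stripe unit i lands on volume i mod N."""
    return unit % num_volumes


def vdisk_for_volume(volume: int, volumes_per_vdisk: int) -> int:
    """Assumed numbering: volumes 0..k-1 on vdisk 0, k..2k-1 on vdisk 1, ..."""
    return volume // volumes_per_vdisk


def regions_touched(num_volumes: int, units: int) -> dict:
    """Count the distinct volumes (disk regions) hit on each virtual disk
    while reading `units` consecutive stripe units."""
    volumes_per_vdisk = num_volumes // VDISKS
    touched = defaultdict(set)
    for unit in range(units):
        volume = volume_for_unit(unit, num_volumes)
        touched[vdisk_for_volume(volume, volumes_per_vdisk)].add(volume)
    return {vdisk: len(vols) for vdisk, vols in sorted(touched.items())}


print("16 volumes:", regions_touched(16, 64))
print(" 4 volumes:", regions_touched(4, 64))
```

With 16 volumes, a sequential scan keeps four separate regions active on every set of 12 spindles, so the heads have to move between them. With 4 volumes, each set of spindles serves a single region.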

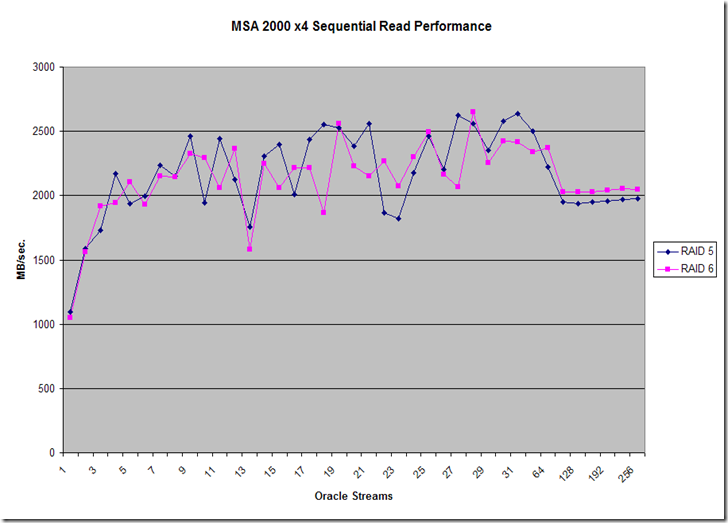

The last step was to take our two best performing single array configurations and deploy them across all 4 units, and test aggregate performance. Here are the results of that test:

This is looking really good. One thing I should mention is that with a single server, I can never fully saturate all 4 storage arrays. We are now reaching peak performance of around 2700 MB/sec, which is getting close to the limits of our host bus adapters. It will actually take at least 2 of these servers doing maximum sequential reads to fully saturate the capability of this storage.
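The "at least 2 servers" estimate can be reproduced with some back-of-the-envelope arithmetic. This sketch treats 1.24GB/sec as 1240 MB/sec and assumes throughput scales linearly across the 4 arrays and that a second server would deliver the same 2700 MB/sec as the first. Those are assumptions for the sake of the estimate, not measurements.

```python
import math

SINGLE_ARRAY_MBPS = 1150   # best single-array result above
ARRAY_CEILING_MBPS = 1240  # quoted theoretical maximum (1.24GB/sec)
ARRAYS = 4
ONE_SERVER_MBPS = 2700     # peak seen from a single server

# How close one array gets to its theoretical ceiling.
array_utilization = SINGLE_ARRAY_MBPS / ARRAY_CEILING_MBPS

# What 4 arrays could deliver if they scaled linearly (assumption).
aggregate_target = SINGLE_ARRAY_MBPS * ARRAYS

# Share of that target a single server can pull.
server_share = ONE_SERVER_MBPS / aggregate_target

# Servers needed to reach the target, assuming each pulls 2700 MB/sec.
servers_needed = math.ceil(aggregate_target / ONE_SERVER_MBPS)

print(f"single array at {array_utilization:.1%} of ceiling")
print(f"aggregate target: {aggregate_target} MB/sec")
print(f"one server reaches {server_share:.1%} of target")
print(f"servers needed: {servers_needed}")
```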

Next steps:

- We’ve determined which RAID 5 or 6 configuration will give us the best performance. Now we need to decide whether to use RAID 5 or RAID 6. If we use RAID 5, we can withstand a single disk failure per 12 disks, and the global spare will be brought online immediately. If we use RAID 6, we can withstand 2 disk failures per 12 disks. Some other things to think about: with RAID 5 we end up with 25.6TB of usable space, and with RAID 6 we end up with 23.4TB. If we decide to mirror across storage arrays for further redundancy, these figures are halved to 12.8TB for RAID 5 and 11.7TB for RAID 6.

- Optimal sizing for Redo logs and Archive logs: What I would prefer to do is present 16 total disks, 4 from each array, to achieve the best performance. If we go with RAID 5, each one of these 16 disks will be roughly 1.6TB in size. If we use RAID 6 each one will be roughly 1.45TB in size. I would recommend we partition them at the Linux level to take a small section from the beginning of each disk to use for Redo or Archive logs. For example, if we took 50GB from each disk, we would have 800GB total for Redo logs. Likewise for archive logs, taking 100GB from each disk would give us 1.6TB total for archive logs. I’ve scheduled a meeting with the DBA team to make decisions here.
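The sizing numbers in these two bullets can be cross-checked with a short sketch. It uses decimal units (1TB = 1000GB) and assumes both the redo and archive slices come off the same 16 disks, which is one possible reading of the proposal rather than a decision. The `plan` function is hypothetical.

```python
DISKS = 16  # 4 presented disks from each of 4 arrays
USABLE_GB = {"RAID 5": 25_600, "RAID 6": 23_400}  # 25.6TB and 23.4TB
REDO_GB_PER_DISK = 50
ARCHIVE_GB_PER_DISK = 100


def plan(usable_gb: int) -> dict:
    """Per-disk and total sizes for one RAID level (illustrative only)."""
    per_disk_gb = usable_gb / DISKS
    return {
        "per_disk_gb": per_disk_gb,
        "redo_total_gb": REDO_GB_PER_DISK * DISKS,
        "archive_total_gb": ARCHIVE_GB_PER_DISK * DISKS,
        # Space left per disk if both log slices are carved from it.
        "data_per_disk_gb": per_disk_gb
        - REDO_GB_PER_DISK
        - ARCHIVE_GB_PER_DISK,
        # Usable space if we mirror across storage arrays.
        "mirrored_usable_gb": usable_gb / 2,
    }


for level, usable in USABLE_GB.items():
    print(level, plan(usable))
```

The RAID 6 per-disk figure works out to 1462.5GB, which is where the rough 1.45TB above comes from.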

All in all, this is looking like a very exciting and high-performing data warehouse solution. Now I want to figure out a way to benchmark more than one node simultaneously and obtain meaningful results. I suppose I could start 2 nodes running ORION simultaneously and aggregate their results, but that might not be ideal.
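If I did go the route of running two nodes at once, the aggregation step might look something like the sketch below. It assumes each node's throughput has already been reduced to MB/sec samples keyed by a shared interval number, which ORION does not hand you in this form as far as I know. It only sums intervals where every node was actually running, so ramp-up and tail-off on one node don't skew the total. The sample values are obvious placeholders, not measurements.

```python
from typing import Dict


def aggregate(node_samples: Dict[str, Dict[int, float]]) -> Dict[int, float]:
    """Sum per-node MB/sec for each interval that every node reported."""
    common = set.intersection(*(set(s) for s in node_samples.values()))
    return {
        interval: sum(samples[interval] for samples in node_samples.values())
        for interval in sorted(common)
    }


# Placeholder numbers purely to show the shape of the data.
samples = {
    "node1": {0: 100.0, 1: 110.0, 2: 120.0},
    "node2": {1: 200.0, 2: 210.0, 3: 220.0},
}

combined = aggregate(samples)
print(combined)                 # only intervals 1 and 2 overlap
print(max(combined.values()))   # peak combined throughput
```

The weak point is clock alignment between the nodes, which is part of why this might not be ideal.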

Any blog readers out there care to offer suggestions for benchmarking multi-node performance? SNMP data gathering at the SAN fabric level? Software benchmark tools?
